|

Can you tell which one is real and which is virtual? Ok, so it's pretty easy, but not bad for automatic reconstruction :)

|

||

|

I will be presenting the latest (and last) of my PhD research at CVMP in November on "Safe Hulls", the first ever method to guarantee construction of a visual hull surface without phantom volumes. I'm a regular at this conference now, so hopefully I'll be getting CVMP Miles which entitle me to a free publication next year. |

||

|

I will be joining the Human Communication Technologies Laboratory at the University of British Columbia in February 2008. I will be working with Sidney Fels on MyView, a flexible architecture for multiple view video processing and analysis.

|

||

|

My thesis, "High Quality Novel View Rendering from Multiple Cameras", is now available from this web site (PDF).

|

||

|

My research fellowship at UBC will involve me working on the following projects:

|

||

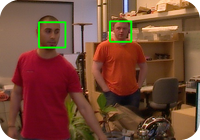

One of the projects we've been working on, called Hive, is a framework for distributed vision processing, allowing reusable modules to be connected together. Modules can be devices such as cameras or algorithms e.g. face tracking. The work will be presented at the International Conference on Distributed Smart Cameras at Stanford University in September.

|

||

|

Hive, our distributed vision processing system, is now available for download on Windows, Mac OS X and Linux. See the project page for details!

|

||

|

We have developed a suite of example vision systems to demonstrate the power of Hive:

This work will be presented at the International Conference on Computer Vision Theory and Applications in Lisbon in February 2009. |

||

|

Since the beginning of January I have been teaching the Computer Graphics course of UBC's ECE degree programme. The course is for final year and master's students, and covers the high-level theory of graphics and its application through OpenGL.

|

||

|

Our mobile multi-view video browsing interface called |

||

|

We have recently developed an automatic image registration system for stitching and stacking problems, which works with low or high frequency images as well as image sets which vary in intensity (high dynamic range - HDR) and focus. This system will be presented at the International Conference on Vision Systems in Liege in October.

|

||

|

Our vision system development framework, VU, provides a new way to organise vision systems and a novel classification of the computer vision problem as a whole. Using our well-defined components for camera access, image conversion and data transport, users can construct vision systems rapidly and easily, to capture and process data. This framework will be presented at the Workshop on Multimedia Information Processing and Retrieval in San Diego in December.

|

||

|

As part of our work to create a Rapid Vision Application Development (RVAD) framework, we are developing an image retrieval abstraction called the Unified Camera Framework. We detail the current system and our initial findings in our paper Uniform Image and Camera Access and will present the work at the Workshop on the Applications of Computer Vision, part of the Winter Vision Meetings, in Snowbird, Utah in December.

|

||

|

Our latest additions to the Unified Camera Framework are an addressing mechanism (turning every camera into a network camera), an improved driver model and networked camera management tools. This work, detailing the entire framework, will be presented at the International Conference on Distributed Smart Cameras in Atlanta in August.

|

||

|

As part of the Winter Vision Meetings I am organising the Workshop on Person-Oriented Vision which will be held in Kona, Hawaii on the 5th of January 2011. I intend the workshop to highlight ongoing research in accessible and interactive computer vision, as well as provide an opportunity for discussions on new directions for the field. We are looking to publish work on the following topics:

|

||

|

On the multimedia and HCI side of our research we have been working on navigational aids for viewing video. At the UIST poster session in New York City this October we will be presenting our work on viewing temporal video annotation and its applications for navigation.

|

||

|

The Winter Vision Meetings is a collection of workshops held annually. The next group of meetings will be held in January 2011 in Kona, Hawaii, and will host:

|

||

|

As part of our on-going effort to provide an abstraction over computer vision algorithms we will be presenting a conceptual decomposition of computer vision into the axioms of vision and a problem-based taxonomy of image registration at the Canadian Conference on Computer and Robot Vision in St. John's, NL in May.

|

||

|

One of the challenges of interactive video is that the clickable components move around the display; this is also a problem in real-time strategy games and some web-based interfaces. In our example of viewing a hockey game, the players are selectable in order to retrieve information or to customise the actions of the viewer (such as 'follow the player'). In our latest work, Moving Target Selection in 2D Graphical User Interfaces, we have introduced an intuitive and simple method for users to select dynamic objects. We will be presenting this work at the Interact Conference on Human-Computer Interaction in Lisbon in September.

|

||

|

SIGGRAPH is coming to Vancouver this year! We will be attending to present the latest research on our computer vision shader language, which is part of our OpenVL project. The presentation will be part of the poster session, and the poster will be on display throughout the conference.

|

||

|

At the International Conference on Vision Systems this year we will be presenting our latest research into a general abstraction for computer vision, OpenVL. OpenCV and similar systems provide sophisticated computer vision methods directly to the user, which requires a lot of knowledge and experience to use effectively. We have developed a methodology which provides the user with a mechanism to execute the required vision algorithm through a higher level interface, allowing a much larger audience to employ computer vision methods.

|

||

|

While creating our computer vision abstraction we have developed a set of guidelines to help steer our design decisions. We believe these guidelines will be of some use to others in the vision community, so we will be presenting a few of them at the Human-Computer Interaction workshop held in conjunction with the International Conference on Computer Vision (ICCV) in Barcelona in November.

|

||

|

This year I am a programme co-chair for the Graphics, Animation and New Media (GRAND) 2012 Conference's Research Notes session, with Noreen Kamal (MAGIC, University of British Columbia) and Neesha Desai (Computing Science, University of Alberta). Notes are an opportunity for undergraduates, master's students, Ph.D. candidates and post-doctoral fellows to present their work, meet other GRAND members and foster/maintain collaborations. The third annual GRAND conference will take place 2nd-4th May 2012 in Montréal, Canada.

GRAND 2012 will showcase a multi-disciplinary programme of research and innovation spanning the broad spectrum of digital media, from researchers and institutions across Canada. The conference, held at Le Centre Sheraton, Montréal, will kick off on the evening of Wednesday 2nd May, with an opening reception showcasing Posters and Demos. Conference sessions running Thursday 3rd May, and Friday 4th May, will feature an exciting line up of Plenary Speakers, Posters and Demos, Mashups (presentations), Research Notes (organised by GRAND HQP Co-Chairs) plus the wonderfully insane 2-Minute Madness. We look forward to seeing you in digitally-fuelled, culturally vibrant Montréal! |

||

|

I am pleased to announce that the 1st Workshop on User-Centred Computer Vision (UCCV'13) will be held as part of the IEEE Winter Vision Meetings in Tampa, Florida, U.S.A. in January 2013. UCCV is a one day workshop intended to provide a forum to discuss the creation of intuitive, interactive and accessible computer vision technologies. The workshop will be focussed on research from academia and industry in the fields of computer vision and human-computer interaction.

The majority of researchers in computer vision focus on advancing the state-of-the-art in algorithms and methods; there is very little focus on how the state-of-the-art can be usefully presented to the majority of people. Research is required to provide new technology to address the shortcomings in the usability of computer vision. The workshop will bring together researchers from academia and industry in the fields of computer vision and human-computer interaction to discuss the state-of-the-art in HCI for Vision.

We invite the submission of original, high quality research papers on user-centred, interactive or accessible computer vision. Areas of interest include (but are not limited to):

|

||

|

||||

|

The schedule for AFDS has been announced - OpenVL will be presented in the Programming Languages and Models (PL) session at 14:15 on Tuesday 12th June 2012 in room E/F of the Hyatt Regency, Bellevue. The session ID is PL-4189, and the title of the session is "OpenVL: A High-Level Developer Interface to Computer Vision".

|

||

|

The OpenVL team is organising the first workshop on Developer-Centred Computer Vision

There has been a relatively recent surge in the number of developer interfaces to computer vision becoming available: OpenCV has become much more popular, MathWorks have released a MATLAB Computer Vision Toolbox, visual interfaces such as Vision-on-Tap are online and working, and specific targets such as tracking (OpenTL) and GPU (CUDA, OpenVIDIA) have working implementations. Additionally, in the last six months Khronos (the not-for-profit industry consortium which creates and maintains open standards) has formed a working group to discuss the creation of a computer vision hardware abstraction layer (CV HAL). Developing methods to make computer vision accessible poses many interesting questions and will require novel approaches to the problems.

This one day workshop will bring together researchers in the fields of Vision and HCI to discuss the state-of-the-art and the direction of research. There will be peer-reviewed demos and papers, with three oral presentation sessions and a poster session. We invite the submission of original, high quality research papers and demos on accessible computer vision. Areas of interest include (but are not limited to):

|

||

|

Today we presented our latest work on the OpenVL project to the delegates at the AMD Fusion Developer Summit in Bellevue, Washington. Based on many conversations, it seems industry really needs a solution to the developer-level computer vision problem, and there has been some excitement over the possibilities of OpenVL.

|

||

We have been invited to give a talk on the latest research on OpenVL, our developer-friendly interface to computer vision, at Qualcomm Research in Santa Clara. Qualcomm develop FastCV, a highly optimised embedded vision platform: we're looking forward to some interesting discussions!

|

|||

Khronos have invited us to give a talk on the latest research on OpenVL at their AGM (in Vancouver, coincidentally), and to see how it fits in with their efforts to create an open standard for vision at the hardware level.

|

|||

|

Collaborating with Kunsan National University in Korea, we will be presenting a method for transforming segmentation algorithms to fit the OpenVL segmentation abstraction, so that mainstream developers will have access to sophisticated segmentation methods.

We will be presenting this work at the 1st Workshop on Developer-Centred Computer Vision as part of the Asian Conference on Computer Vision in Daejeon, Korea. |

||

|

The first workshop on Developer-Centred Computer Vision was held today in conjunction with ACCV!

Gregor presented the state of the art in developer-centred computer vision, followed by five excellent talks. The workshop had an acceptance rate of 24% and an attendance of approximately 45 people. |

||

|

Today we held the first workshop on User-Centred Computer Vision at the 2013 Winter Vision Meetings at Clearwater Beach in Tampa, Florida. Dong Ping Zhang from AMD Research gave the keynote talk on the advantages of heterogeneous computing for computer vision, attracting an audience of over 100 people. We held 9 talks over the course of the day (acceptance rate of 47% for the workshop) which attracted an average audience of 40 people. Gregor presented the OpenVL abstraction for image segmentation, demonstrating the simple description layer we use to produce segments by selecting an appropriate algorithm under the hood.

|

||

|

Our first overview paper on the central concepts of OpenVL was presented today at the Workshop on Applications of Computer Vision (to become the Winter Conference on Applications of Computer Vision next year). OpenVL: A Task-Based Abstraction for Developer-Friendly Computer Vision describes how our framework is designed to provide mainstream developers (i.e. those not trained in computer vision) with access to sophisticated methods for segmentation, correspondence, registration, detection and tracking.

We will hopefully be releasing the source to OpenVL and our segmentation methods in the near future. If you are interested in using or contributing to OpenVL, please contact me! |

||

|

We will be presenting an overview of OpenVL at the upcoming Graphics, Animation and New Media Network's AGM in Toronto in May, alongside the industry digital media conference Canada 3.0. If you're attending either and you're interested in OpenVL, contact me or come to the presentation!

|

||

|

At the International Conference on Computer and Robot Vision in Regina, Saskatchewan this May, we will be presenting our work on a foundational representation for developer-friendly computer vision as part of our OpenVL framework. The paper discusses the details of using segments as a metaphor to allow developers to intuitively describe images and vision operations, while simultaneously providing a useful representation for results.

|

||

|

The first paper on our new interface for video navigation using a personal viewing history will be presented at INTERACT in Cape Town in September. The interface is based on a similar concept to a web browser's history: we keep a record of each interval within a video which has been viewed by a user, and present a management interface of the video history to allow fast navigation to previously viewed clips. The history then enables other applications such as video summarisation, authoring and sharing in a very simple way. We demonstrate in our evaluation that users are more proficient at finding previously viewed events in video using our interface, and that users would very much like to see this kind of interface integrated into YouTube and similar services.
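
To give a rough idea of what recording viewed intervals can look like, here is a minimal Python sketch. It is not the implementation from the paper: the `ViewingHistory` class, its method names and the use of seconds as the time unit are all assumptions made purely for illustration.

```python
from dataclasses import dataclass, field


@dataclass
class ViewingHistory:
    """Hypothetical per-user record of viewed video intervals (in seconds)."""

    intervals: list[tuple[float, float]] = field(default_factory=list)

    def record(self, start: float, end: float) -> None:
        """Store one viewed interval, in the order it was watched."""
        if end <= start:
            raise ValueError("interval must have positive duration")
        self.intervals.append((start, end))

    def merged(self) -> list[tuple[float, float]]:
        """Return the viewed portions of the video with overlaps merged."""
        result: list[tuple[float, float]] = []
        for start, end in sorted(self.intervals):
            if result and start <= result[-1][1]:
                # Overlaps or touches the previous span, so extend it.
                result[-1] = (result[-1][0], max(result[-1][1], end))
            else:
                result.append((start, end))
        return result


history = ViewingHistory()
history.record(30.0, 45.0)
history.record(10.0, 20.0)
history.record(40.0, 60.0)
print(history.merged())  # [(10.0, 20.0), (30.0, 60.0)]
```

Keeping the raw intervals in viewing order preserves the "history" aspect (what was watched, and when), while the merged view is one possible starting point for a summary of everything seen so far.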

|

||

|

We are very happy to announce the first release of OpenVL! This version provides a high-level interface to image segmentation. Results can be visualised using the included VisionGL library (for testing etc.), or extracted to use for your own applications.

The release contains C++ static library binaries for OS X (Xcode/GCC), Windows (Visual Studio 2010) and Linux (GCC), as well as all the necessary headers, and an example application. You can download it here: OpenVL-v0.1a-20130701

Please note that this is research software, so expect lots of bugs and missing features. Feedback is appreciated, so please let us know if you find a problem or would like to suggest an addition. We are also looking for academic and industry collaborations to help develop OpenVL - contact us if you're interested!

Release Notes

*** 2013/07/01 OpenVL v0.1a *** First release

|

||

At the annual general meeting of the Canadian GRAND network we will be presenting OpenVL's segmentation process, a qualitative evaluation of moving target acquisition methods, authoring using a personal video navigation history and hosting a demo session on a video player dedicated to sharing sub-intervals of video.

The meeting will take place in Ottawa in mid-May - if you're a member of the GRAND network please come and try the demos.

|

|||

This year we have seen a lot of success with accepted presentations at SIGGRAPH. I will be presenting OpenVL as a Talk and VisionGL as a poster, and will be helping to present MyView as a poster and Spheree as a poster and an emerging technology. Demos of all technologies will be available; if you're attending SIGGRAPH then please come along and try them!

|

|||